ARFS - How to use with large data?#

It might be than the data set is too large for All Relevant Feature Selection to run in a reasonable time. Usually random sampling (stratified, grouped, etc) solves this issues. If extreme sampling is needed, ARFS provide two methods for decreasing drastically the number of rows. The sampling methods and outcomes are illustrated below.

[1]:

# from IPython.core.display import display, HTML

# display(HTML("<style>.container { width:95% !important; }</style>"))

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import gc

from sklearn.pipeline import Pipeline

import arfs.feature_selection as arfsfs

import arfs.feature_selection.allrelevant as arfsgroot

from arfs.preprocessing import OrdinalEncoderPandas

from arfs.benchmark import highlight_tick, compare_varimp, sklearn_pimp_bench

from arfs.utils import load_data

from arfs.sampling import sample

# plt.style.use('fivethirtyeight')

rng = np.random.RandomState(seed=42)

import warnings

warnings.filterwarnings("ignore")

Using `tqdm.autonotebook.tqdm` in notebook mode. Use `tqdm.tqdm` instead to force console mode (e.g. in jupyter console)

[2]:

import arfs

print(f"Run with ARFS {arfs.__version__}")

Run with ARFS 2.0.5

[3]:

%matplotlib inline

[4]:

gc.enable()

gc.collect()

[4]:

4

Sampling data#

A fairly large data set for illustration

[5]:

# X, y, w = _generated_corr_dataset_regr(size=100_000)

housing = load_data(name="housing")

X, y = housing.data, housing.target

X = pd.DataFrame(X)

X.columns = housing.feature_names

y = pd.Series(y)

X.head()

[5]:

| MedInc | HouseAge | AveRooms | AveBedrms | Population | AveOccup | Latitude | Longitude | |

|---|---|---|---|---|---|---|---|---|

| 0 | 8.3252 | 41.0 | 6.984127 | 1.023810 | 322.0 | 2.555556 | 37.88 | -122.23 |

| 1 | 8.3014 | 21.0 | 6.238137 | 0.971880 | 2401.0 | 2.109842 | 37.86 | -122.22 |

| 2 | 7.2574 | 52.0 | 8.288136 | 1.073446 | 496.0 | 2.802260 | 37.85 | -122.24 |

| 3 | 5.6431 | 52.0 | 5.817352 | 1.073059 | 558.0 | 2.547945 | 37.85 | -122.25 |

| 4 | 3.8462 | 52.0 | 6.281853 | 1.081081 | 565.0 | 2.181467 | 37.85 | -122.25 |

Cleaning the data#

[6]:

basic_fs_pipeline = Pipeline(

[

("missing", arfsfs.MissingValueThreshold(threshold=0.05)),

("unique", arfsfs.UniqueValuesThreshold(threshold=1)),

("cardinality", arfsfs.CardinalityThreshold(threshold=10)),

("collinearity", arfsfs.CollinearityThreshold(threshold=0.75)),

]

)

X_trans = basic_fs_pipeline.fit_transform(X=X, y=y)

# collinearity__sample_weight=w,

# lowimp__sample_weight=w)

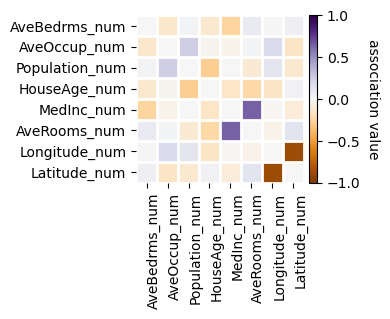

[7]:

f = basic_fs_pipeline.named_steps["collinearity"].plot_association(figsize=(4, 4))

[8]:

data = X_trans.copy()

data["target"] = y.values

[9]:

print(f"The dataset shape is {data.shape}")

The dataset shape is (20640, 7)

Random sampling#

You can sample using pandas or scikit-learn (providing more advanced random sampling, as stratified sampling, depending on the task).

[10]:

data_rnd_samp = data.sample(n=1_000)

print(f"The sampled dataset shape is {data_rnd_samp.shape}")

The sampled dataset shape is (1000, 7)

Sampling by clustering the rows#

Using the Gower distance (handling mixed-type data), the rows are clustered and the average/mode for each column is returned. The larger the number of clusters, the closer to the original data. As it requires the computation of a distance matrix, you might need to use random sampling first to avoid a computational bottleneck.

[11]:

%%time

data_dum = data.sample(n=10_000)

data_dum = data.copy()

# Compute a distance matrix, 10_000x10_000 and use it for clustering (1_000 clusters)

data_g_samp = sample(df=data_dum, n=1_000, sample_weight=None, method="gower")

print(f"The sampled dataset shape is {data_g_samp.shape}")

The sampled dataset shape is (1000, 8)

CPU times: user 49.1 s, sys: 12.4 s, total: 1min 1s

Wall time: 1min 2s

Sampling by removing outliers#

This method is adapted from BorutaShap. It uses IsolationForest to remove the less similar samples and iterates till the 2-sample KS statistics is > \(95\%\) (for having a similar distribution than the original data). There is no guarantee of the output size.

[12]:

%%time

data_isof_samp = sample(df=data, sample_weight=None, method="isoforest")

print(f"The sampled dataset shape is {data_isof_samp.shape}")

The sampled dataset shape is (2064, 7)

CPU times: user 399 ms, sys: 13.3 ms, total: 413 ms

Wall time: 449 ms

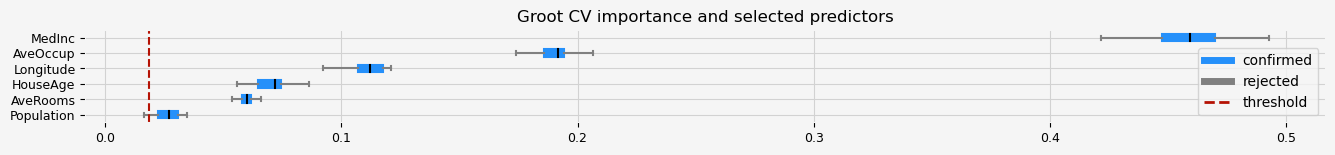

Impact on the feature selection#

Let’s perform the feature selection on this toy data set to see what is the impact of the different sampling strategies.

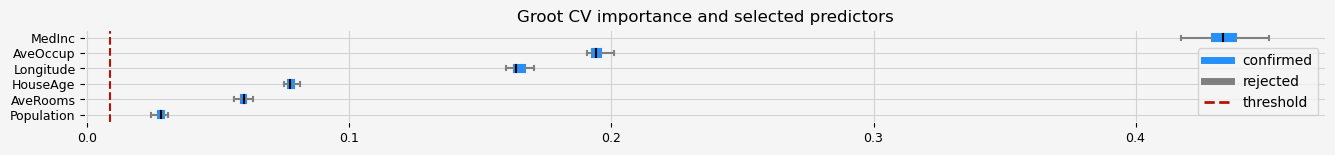

[13]:

%%time

# No Sampling

X = data.drop("target", axis=1)

y = data.target

# GrootCV

feat_selector = arfsgroot.GrootCV(

objective="rmse", cutoff=1, n_folds=5, n_iter=5, silent=True

)

feat_selector.fit(X, y, sample_weight=None)

print(feat_selector.get_feature_names_out())

fig = feat_selector.plot_importance(n_feat_per_inch=5)

# highlight synthetic random variable

fig = highlight_tick(figure=fig, str_match="random")

fig = highlight_tick(figure=fig, str_match="genuine", color="green")

plt.show()

['MedInc' 'HouseAge' 'AveRooms' 'Population' 'AveOccup' 'Longitude']

CPU times: user 12min 19s, sys: 2.9 s, total: 12min 22s

Wall time: 3min 32s

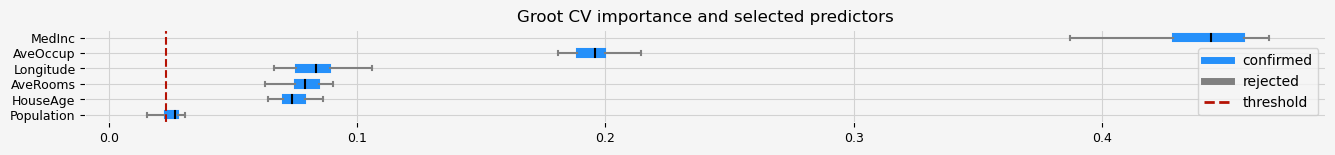

[14]:

%%time

X = data_rnd_samp.drop("target", axis=1)

y = data_rnd_samp.target

# GrootCV

feat_selector = arfsgroot.GrootCV(

objective="rmse", cutoff=1, n_folds=5, n_iter=5, silent=True

)

feat_selector.fit(X, y, sample_weight=None)

print(feat_selector.get_feature_names_out())

fig = feat_selector.plot_importance(n_feat_per_inch=5)

# highlight synthetic random variable

fig = highlight_tick(figure=fig, str_match="random")

fig = highlight_tick(figure=fig, str_match="genuine", color="green")

plt.show()

['MedInc' 'HouseAge' 'AveRooms' 'Population' 'AveOccup' 'Longitude']

CPU times: user 23.1 s, sys: 436 ms, total: 23.5 s

Wall time: 7.43 s

[15]:

%%time

X = data_g_samp.drop("target", axis=1)

y = data_g_samp.target

# GrootCV

feat_selector = arfsgroot.GrootCV(

objective="rmse", cutoff=1, n_folds=5, n_iter=5, silent=True

)

feat_selector.fit(X, y, sample_weight=None)

print(feat_selector.get_feature_names_out())

fig = feat_selector.plot_importance(n_feat_per_inch=5)

# highlight synthetic random variable

fig = highlight_tick(figure=fig, str_match="random")

fig = highlight_tick(figure=fig, str_match="genuine", color="green")

plt.show()

['MedInc' 'HouseAge' 'AveRooms' 'Population' 'AveOccup' 'Longitude']

CPU times: user 25.3 s, sys: 512 ms, total: 25.8 s

Wall time: 8.27 s

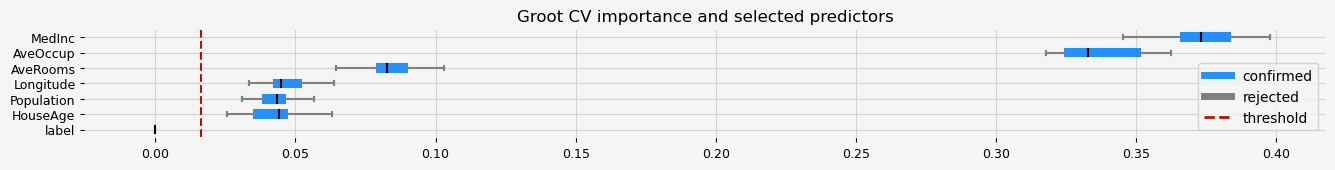

[16]:

%%time

X = data_isof_samp.drop("target", axis=1)

y = data_isof_samp.target

# GrootCV

feat_selector = arfsgroot.GrootCV(

objective="rmse", cutoff=1, n_folds=5, n_iter=5, silent=True

)

feat_selector.fit(X, y, sample_weight=None)

print(feat_selector.get_feature_names_out())

fig = feat_selector.plot_importance(n_feat_per_inch=5)

# highlight synthetic random variable

fig = highlight_tick(figure=fig, str_match="random")

fig = highlight_tick(figure=fig, str_match="genuine", color="green")

plt.show()

['MedInc' 'HouseAge' 'AveRooms' 'Population' 'AveOccup' 'Longitude']

CPU times: user 39.5 s, sys: 630 ms, total: 40.2 s

Wall time: 12.7 s